Jul 17, 2025

Anthar Study: Evaluating AI Coding Agents Beyond Benchmarks

May 14, 2025

A. Jawwad, Advaith, Akshit, Aniketh, Ashwin, Chiranjeevi, Divyansh, Joel, Manideep, Murugan, Phanindra, Shriya, Waseem

Overview

The evolution of AI coding assistants from basic autocomplete tools to sophisticated autonomous agents capable of understanding complex software engineering tasks has significantly transformed the landscape of software development. However, the crucial challenge today isn’t simply assessing whether AI agents can generate code, but determining if and how they genuinely contribute value within human-centric, security-conscious, and production-level environments. Current benchmarks such as SWE-Bench Verified and SWE-PolyBench, though valuable, exhibit notable limitations in evaluating the practical usability, security awareness, and integration of these agents into realistic developer workflows.

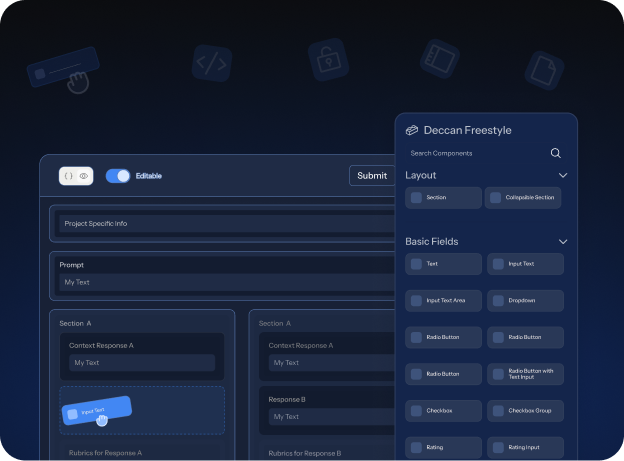

Addressing this gap, we introduce the Deccan AI Anthar Study, a human-validated evaluation of six prominent autonomous coding agents: Aider, SWE-agent, Cursor, OpenHands, Windsurf, and Devin. Anthar Study Verified emphasizes real-world scenarios, examining each agent’s performance beyond code correctness and assessing its suitability for practical development contexts. This post presents the study’s rationale, methodology, key findings, and implications, offering insights to inform the next generation of AI-assisted coding technologies.


Why Build Another Benchmark? The Need for Anthar Study Verified

While SWE-Bench Verified serves as the go-to lightweight benchmark for Python regression checks, and SWE-PolyBench stresses multilingual breadth across Java, JavaScript, TypeScript, and Python, we saw an opportunity to focus on aspects often overlooked:

- Multilingual realism: Covering both Python and JavaScript with plans to add more languages.

- Developer experience metrics: Introducing human-validated rubrics that measure helpfulness, planning quality, and security—not just binary test pass/fail.

- Deployment-mode relevance: Separately benchmarking headless pipeline-compatible agents and interactive IDE-integrated copilots, reflecting how developers actually use these tools.

- Reproducibility and transparency: Publishing base commit hashes, ground-truth diffs, and a deterministic evaluation harness for end-to-end verification.

By addressing these gaps, Anthar Study Verified aims to provide a verified, multi-dimensional lens for understanding and improving the next generation of AI coding assistants, so that they are more reliable and better aligned with real-world developer needs.

Introducing the Anthar Verified Dataset and Study Methodology

Our study is built upon the Anthar Verified Dataset, a collection of 43 curated samples derived directly from resolved GitHub Pull Requests (PRs) in real-world Python (22 samples) and JavaScript (21 samples) open-source repositories.

Dataset Characteristics:

- Real-world Authenticity: Each sample represents a genuine development task – fixing a bug, adding a new feature, or implementing an enhancement. The distribution in our dataset is 23 enhancements, 10 bug fixes, and 10 new features.

- Diverse Codebases: Samples were selected from repositories with active contribution histories (over 200 commits), manageable sizes (50MB to 300MB to avoid excessive build times), and importantly, existing test suites. We prioritized PRs that included changes to tests alongside the main code changes.

- Verified Ground Truth: The “ground truth” for each task is the actual code changes made by the human developers in the original merged GitHub PR. This captures domain-specific patterns and provides authentic context that synthetic benchmarks often lack.
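
The repository criteria above can be expressed as a simple filter. The following is a minimal sketch, not the study's actual tooling: the `RepoStats` type, its field names, and the example repositories are hypothetical, and treating the 50MB and 300MB bounds as inclusive is an assumption.

```python
from dataclasses import dataclass


@dataclass
class RepoStats:
    """Hypothetical summary of a candidate repository (illustrative fields)."""
    name: str
    commit_count: int
    size_mb: float
    has_test_suite: bool


def is_eligible(repo: RepoStats) -> bool:
    """Apply the selection thresholds listed in the dataset description."""
    return (
        repo.commit_count > 200          # active contribution history
        and 50 <= repo.size_mb <= 300    # manageable size (bounds assumed inclusive)
        and repo.has_test_suite          # an existing test suite is required
    )


# Invented candidates, used only to exercise the filter.
candidates = [
    RepoStats("example/active-lib", 1500, 120.0, True),
    RepoStats("example/tiny-tool", 80, 12.0, True),
    RepoStats("example/untested-app", 900, 200.0, False),
]
eligible = [repo.name for repo in candidates if is_eligible(repo)]
print(eligible)
```

A filter like this only narrows the pool; the manual screening of individual PRs described below would still happen afterwards.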

Dataset Creation Methodology:

We created the dataset using a meticulous process that involved multiple annotators:

- Sample Curation: Experienced Python and JavaScript developers manually screened thousands of GitHub PRs based on criteria like task type, repository health, presence of tests, and relevance.

- Prompt Creation: For each selected PR, a clear, unambiguous coding prompt was created by expert developers. These prompts were derived solely from the ground truth patch and PR context, structured in a “Given… When… Then…” format to define requirements precisely. This ensures the agent is working from a realistic problem description.

- Multi-Annotator Validation: Both the created prompts and the subsequent agent evaluations were reviewed and validated by multiple experienced developers, adding a crucial layer of human verification to the dataset.
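
To make the “Given… When… Then…” structure concrete, here is a small sketch of how such a prompt could be represented and rendered. The `TaskPrompt` class and the example task text are invented for illustration and are not taken from the dataset.

```python
from dataclasses import dataclass


@dataclass
class TaskPrompt:
    """A coding prompt split into the three Given/When/Then parts."""
    given: str  # starting state of the codebase
    when: str   # the triggering action or condition
    then: str   # the required behavior after the change

    def render(self) -> str:
        """Produce the prompt text handed to an agent."""
        return f"Given {self.given}\nWhen {self.when}\nThen {self.then}"


# Invented example task, not a sample from the study.
prompt = TaskPrompt(
    given="a CSV export endpoint that ignores the user's locale",
    when="a user with a non-default locale requests an export",
    then="dates in the exported file are formatted for that locale",
)
print(prompt.render())
```

Keeping the three parts as separate fields makes it easy for reviewers to check that each prompt states a precondition, a trigger, and an expected outcome.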

Our Comprehensive Evaluation Rubrics

To assess agents comprehensively, we developed six key rubrics, reflecting both technical performance and practical usability:

- Correctness: Does the code correctly solve the task and pass relevant tests? (Evaluated via automated tests and diff comparison to ground truth).

- Helpfulness: How much human effort does the agent’s solution save? (Subjective, structured human judgment).

- Plan Creation: How logical and sound is the agent’s initial approach or plan?

- Plan Execution: How well does the agent follow its own plan and implement the required changes?

- File Localization: Does the agent correctly identify and modify the necessary files?

- Security Vulnerability: Does the agent introduce security issues? (Assessed using tools like Bandit for Python and analysis against OWASP principles).
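
One way to record evaluations against these six rubrics is sketched below. The `Rubric` enum mirrors the list above; the `Evaluation` record, the task identifier, and the score labels are assumptions made for illustration, not the study's schema.

```python
from dataclasses import dataclass, field
from enum import Enum


class Rubric(Enum):
    """The six evaluation dimensions named in the study."""
    CORRECTNESS = "Correctness"
    HELPFULNESS = "Helpfulness"
    PLAN_CREATION = "Plan Creation"
    PLAN_EXECUTION = "Plan Execution"
    FILE_LOCALIZATION = "File Localization"
    SECURITY_VULNERABILITY = "Security Vulnerability"


@dataclass
class Evaluation:
    """Hypothetical record of one evaluator's scores for one agent run."""
    agent: str
    task_id: str
    scores: dict = field(default_factory=dict)  # maps Rubric -> label string

    def missing(self) -> list:
        """Return the rubrics that have not been scored yet."""
        return [rubric for rubric in Rubric if rubric not in self.scores]


# Illustrative usage with invented labels and task id.
ev = Evaluation(agent="Aider", task_id="js-001")
ev.scores[Rubric.CORRECTNESS] = "Mostly Correct"
ev.scores[Rubric.FILE_LOCALIZATION] = "Correct"
print([rubric.value for rubric in ev.missing()])
```

Tracking unscored rubrics explicitly helps ensure that every agent run is judged on all six dimensions before results are aggregated.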

How the Study Was Conducted

We set up testing environments by reverting repositories to the commit just before the evaluated PR. We then ran each agent with the human-created prompt. We configured all agents to use Claude 3.7 Sonnet as the underlying LLM where possible.

- Pipeline Agents (Aider, SWE-agent, OpenHands): Run headlessly via scripts, designed for automation.

- IDE-Integrated Agents (Cursor, Windsurf, Devin): Required more manual setup or wrappers to log their interactions and final outputs (plan and code changes).
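
The snapshot step described above, reverting a repository to the commit just before the evaluated PR, might look like the following sketch. The function names and the `abc1234` hash are hypothetical; cleaning untracked files with `git clean` is an added assumption intended to keep runs independent, not something the study states.

```python
import subprocess
from pathlib import Path


def checkout_command(base_commit: str) -> list[str]:
    """Build the git command that moves the working tree to the base commit."""
    return ["git", "checkout", "--detach", base_commit]


def prepare_snapshot(repo_dir: Path, base_commit: str) -> None:
    """Reset a local clone to the state just before the evaluated PR."""
    subprocess.run(checkout_command(base_commit), cwd=repo_dir, check=True)
    # Assumption: remove untracked and ignored files left over from earlier runs.
    subprocess.run(["git", "clean", "-fdx"], cwd=repo_dir, check=True)


# "abc1234" is a placeholder, not a real base commit from the dataset.
print(" ".join(checkout_command("abc1234")))
```

Calling `prepare_snapshot` before every agent run gives each agent the same starting point for a given task.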

We applied the agent’s proposed code changes to the repository snapshot. We then ran the original repository’s test suite and security analysis tools (like Bandit for Python). Finally, our trained human evaluators assessed the agent’s performance against the six rubrics.
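
The post-run steps (apply the patch, run the tests, run security analysis) can be sketched as an ordered list of commands. This is an illustration under stated assumptions: the function name is hypothetical, and `pytest` and `npm test` are assumed test runners, whereas each repository in the study would use its own test command. Bandit is included only for Python, matching the description above.

```python
def evaluation_steps(language: str, patch_file: str) -> list[list[str]]:
    """Return the commands to run, in order, for one agent-produced patch."""
    # Step 1: apply the agent's proposed changes to the repository snapshot.
    steps = [["git", "apply", patch_file]]
    if language == "python":
        # Step 2: run the repository's tests (pytest is an assumed runner).
        steps.append(["python", "-m", "pytest"])
        # Step 3: scan the tree with Bandit and emit a JSON report.
        steps.append(["bandit", "-r", ".", "-f", "json"])
    elif language == "javascript":
        # Step 2: run the repository's tests (npm test is an assumed runner).
        steps.append(["npm", "test"])
    else:
        raise ValueError(f"unsupported language: {language}")
    return steps


for command in evaluation_steps("python", "agent.patch"):
    print(" ".join(command))
```

Returning the commands as data, rather than executing them directly, keeps the plan easy to log and review alongside the human rubric scores.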


Key Results from the Anthar Study

Our evaluation revealed a diverse landscape of capabilities among the agents, highlighting specific strengths and common areas for improvement. No single agent proved universally superior across all tasks and metrics.

Quantitative Highlights:

- We measured correctness based on passing original test suites and correlation with the verified ground truth patches.

- For Python, Devin showed the highest correctness, with a strong correlation (95.3%) to the ground truth patches. OpenHands and Cursor followed. Devin particularly excelled on enhancement tasks.

- For JavaScript, Aider achieved the highest correctness and correlation (89.2%), significantly outperforming others like OpenHands and SWE-agent in this language domain. Aider was the top performer across all JS task types (bug fix, enhancement, feature).
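
The post does not say how the correlation with ground truth patches was computed. As a rough stand-in, and explicitly not the study's metric, a line-level similarity ratio between two unified diffs can be computed with Python's `difflib`. The function names and the two tiny patches are invented for illustration.

```python
import difflib


def changed_lines(patch: str) -> list[str]:
    """Keep only added/removed lines from a unified diff, skipping file headers."""
    return [
        line
        for line in patch.splitlines()
        if line.startswith(("+", "-"))
        # Simplification: any line starting with +++ or --- is treated as a header.
        and not line.startswith(("+++", "---"))
    ]


def patch_similarity(agent_patch: str, truth_patch: str) -> float:
    """Similarity in [0, 1] between the changed lines of two patches."""
    matcher = difflib.SequenceMatcher(
        None, changed_lines(agent_patch), changed_lines(truth_patch)
    )
    return matcher.ratio()


# Invented patches: the agent matches one of the two ground-truth lines.
truth = "--- a/f.py\n+++ b/f.py\n+x = 1\n+y = 2\n"
agent = "--- a/f.py\n+++ b/f.py\n+x = 1\n+y = 3\n"
print(patch_similarity(agent, truth))
```

A textual ratio like this rewards patches that look like the human change, so it complements rather than replaces test-based correctness: a functionally correct patch written differently would score low.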

Performance Breakdown by Language & Qualitative Insights:

- Python Agents: Devin demonstrated strong general performance, achieving high scores consistently across different task types, especially enhancements. OpenHands also performed well overall, with human evaluators noting strong planning and execution, good build success rates, and better alignment with ground truth patches qualitatively compared to some others. Cursor often produced good plans but struggled with execution and code quality (build/lint errors).

- JavaScript Agents: Aider was the standout performer in JavaScript, leading quantitatively in correctness across task types. Aider also performed very well in File Localization, likely benefiting from its design which allows developers to guide the agent on relevant files. SWE-agent consistently showed lower performance compared to most other agents in this study, across both languages.

- IDE Agent Observation (Data Leakage): During runs with IDE-based agents (Cursor, Windsurf, Devin), we observed instances of potential context leakage, where information from previous tasks (file paths, variable names) seemed to influence current task execution. This highlights a challenge in managing agent state in multi-task scenarios, potentially leading to cross-task contamination or unintended information leakage.

Visualizing the Results:

We visualized the study results using:

- Stacked Bar Charts: Showing the distribution of human rubric scores (e.g., Correct, Mostly Correct, Incorrect) for each agent across dimensions like Correctness, Helpfulness, Planning, Execution, and File Localization, separately for Python and JavaScript. These charts illustrate the proportion of success vs. failure for each agent on specific criteria.

- Spider Web (Radar) Plots: Providing a holistic view of each agent’s aggregate performance across all evaluated dimensions in a single chart. This allowed for quick visual comparison of overall profiles, highlighting agents’ relative strengths and weaknesses across the board.

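
The proportions behind a stacked bar chart can be derived by tallying rubric labels per agent. The sketch below shows that aggregation only; the function name and the sample labels are invented and do not reflect the study's results.

```python
from collections import Counter


def label_shares(labels: list[str]) -> dict[str, float]:
    """Convert a list of rubric labels into the share of each label."""
    counts = Counter(labels)
    total = sum(counts.values())
    if total == 0:
        return {}
    return {label: count / total for label, count in counts.items()}


# Invented labels for one agent on one rubric.
sample = ["Correct", "Correct", "Mostly Correct", "Incorrect"]
print(label_shares(sample))
```

Each resulting dictionary corresponds to one stacked bar, with one segment per label.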

Common Challenges Observed Across Agents:

Beyond individual performance, the study highlighted the following systemic challenges:

- Maintainability & Code Proliferation: Agents often generated overly complex solutions, modified too many files unnecessarily, or created redundant components. This introduces technical debt and makes the code harder for humans to understand and maintain.

- Dependency Handling: Agents frequently struggled with correctly managing project dependencies and integrating changes seamlessly into the existing architecture.

- Incomplete Solutions: Missing edge cases, lack of comprehensive test coverage, and neglecting cross-platform considerations were common issues.

- Dependence on Test Suites: The ability to evaluate agent correctness was heavily reliant on the robustness and completeness of the original repository’s test suite.

Limitations and Future Work

This study represents a significant step, but it has limitations. The dataset size (43 samples) is a starting point; expanding it across more languages and task types (e.g., large refactoring, API integrations) is crucial for greater statistical confidence. While our human evaluation is structured and verified, subjectivity remains. We plan to enhance this process with more granular rubrics and formal inter-annotator agreement measures. Future work will also incorporate cost-efficiency metrics (runtime, token usage) and expand security analysis tools for other languages.
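
The post does not name which inter-annotator agreement measure is planned. Cohen’s kappa is one common choice for two raters assigning categorical labels, and a minimal sketch follows. The function name and the example labels are invented for illustration.

```python
from collections import Counter


def cohens_kappa(rater_a: list[str], rater_b: list[str]) -> float:
    """Cohen's kappa for two raters labeling the same items."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("raters must label the same non-empty set of items")
    n = len(rater_a)
    # Observed agreement: fraction of items given the same label by both raters.
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Expected agreement by chance, from each rater's label frequencies.
    counts_a, counts_b = Counter(rater_a), Counter(rater_b)
    expected = sum(counts_a[label] * counts_b[label] for label in counts_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both raters used a single identical label throughout
    return (observed - expected) / (1 - expected)


# Invented ratings: the raters agree on three of four items.
a = ["Correct", "Correct", "Incorrect", "Incorrect"]
b = ["Correct", "Incorrect", "Incorrect", "Incorrect"]
print(cohens_kappa(a, b))
```

Unlike raw percent agreement, kappa discounts agreement that would occur by chance, which matters when most runs receive the same label.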

Implications for Development

Our findings offer practical implications for both developers building these agents and software engineers using them:

- For Agent Developers: Prioritize code quality, maintainability, and architectural alignment alongside correctness. Implement features that guide agents toward minimal, targeted changes and leverage existing codebase components. Design systems that prevent context leakage in multi-task workflows.

- For Software Engineers:

  - Careful Task Selection: Offload routine, well-defined tasks (simple bugs, basic features) but retain ownership of complex architectural changes, performance optimizations, and tasks requiring deep system understanding.

  - Effective Prompt Engineering: Treat prompts as structured requirements documents. Be explicit about requirements, edge cases, and desired code quality, and consider suggesting specific files to modify. Requesting a plan upfront can also improve outcomes.

  - Invest in Test Suites: A comprehensive, robust test suite is your safety net. It’s essential for reliably validating agent-generated code and catching issues early.

Conclusion

The Anthar Study Verified provides a detailed, multi-dimensional view of the current capabilities of leading AI coding agents. While agents like Devin and Aider show impressive potential for correctness in their respective domains, and OpenHands demonstrates strong overall execution, our findings confirm they are not yet autonomous replacements for human developers. They are powerful tools with limitations, requiring careful task selection, robust prompting, strong testing, and continued human oversight to ensure code quality, maintainability, and security.

We envision Anthar evolving to cover more languages, broader tasks, and refined evaluation criteria to fuel innovation in building more reliable, secure, and helpful AI coding assistants.

Reach out to us at hey@deccan.ai for more information, work samples, etc.
