July 17, 2025

Decoding Model Intelligence: A Deep Dive into Mathematical Reasoning and Evaluation Metrics

November 10, 2025

.png)

Introduction

How effectively can today’s top language models handle complex mathematical reasoning? To answer that, we conducted a comprehensive evaluation of three leading AI models - Gemini 2.5 Pro, Claude Sonnet 4.5, and GPT 5 across 339 mathematically intricate tasks.

This study goes beyond accuracy. It uncovers how models think, analyzing their reasoning, conceptual understanding, and communication clarity. The goal: to pinpoint where each model shines, where it stumbles, and how consistently it reasons under pressure.

Why This Analysis Matters

Modern AI models are impressive problem-solvers but complex, multi-step reasoning remains a challenge.Often, a model might:

- Arrive at the right answer for the wrong reasons, or

- Follow solid reasoning but make tiny computational errors, or

- Present correct logic and results, but in a confusing, verbose explanation that hampers learning.

This study was designed to capture these nuances measuring not only what models get right but how they communicate their reasoning, ensuring both accuracy and clarity are evaluated together.

Designing the Evaluation Framework

Taxonomy of Questions

The 339 tasks covered diverse mathematical and scientific domains - Calculus, Algebra, Geometry, and Probability & Statistics and were classified into three core types:

- Formula Substitution: Direct or sequential application of formulas using given or derived data.

- Interdisciplinary Reasoning: Integration of multiple scientific or mathematical principles.

- Non-Traditional Concept Application: Creative reasoning beyond standard or formulaic methods.

Dataset Creation Principles

Each question and answer in the dataset was carefully designed to be:

- Novel and unambiguous, leading to a unique, verifiable final answer.

- Independent of external computational tools like MATLAB or Python to avoid bias.

- LaTeX-formatted to ensure uniform comprehension across all models.

The Human Element: Subject Matter Experts Persona

Evaluation was not left to automation alone. A panel of Subject Matter Experts (SMEs) PhDs and researchers from top Indian universities meticulously reviewed every model-generated response. Their expertise ensured both technical accuracy and pedagogical soundness in the evaluations.

Methodology: End-to-End Evaluation Process

The evaluation followed a structured workflow:

- Expert Validation: Datapoints (Question and answers pairs) were verified or corrected by SMEs.

- Sequential Review: Model responses were assessed in order — Gemini 2.5 Pro → Claude Sonnet 4.5 → GPT 5.

- Error Tagging: Each step in the solution was compared with the expert’s reasoning to identify and classify specific issues.

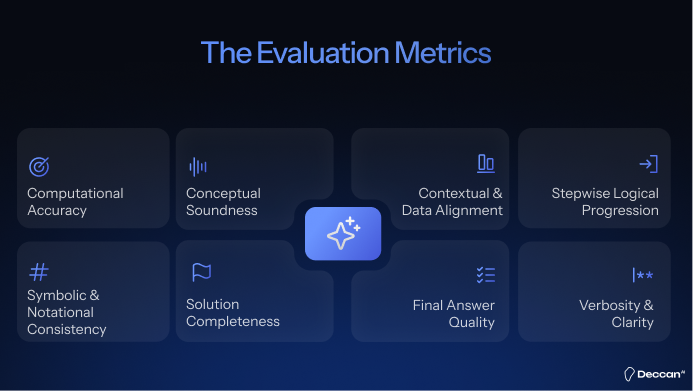

The Evaluation Metrics

Each response was scored across eight dimensions, rated as No Issue, Minor Issue, or Major Issue depending on impact and severity:

- Computational Accuracy – Correctness in arithmetic, algebraic manipulation, and unit conversion.

- Conceptual Soundness – Correct identification and application of underlying principles or theorems.

- Contextual and Data Alignment – Proper interpretation of the problem and adherence to constraints.

- Stepwise Logical Progression – Coherence and logical flow from problem to conclusion.

- Symbolic and Notational Consistency – Uniform use of symbols, variables, and notation.

- Solution Completeness – Addressing all necessary sub-cases and steps.

- Final Answer Quality – Accuracy, clarity, and formatting of the final output.

- Verbosity and Clarity – Balance between explanation depth and conciseness.

Results and Insights

Overall Model Performance:

- Gemini 2.5 Pro led with an 86.69% "No Issues" rate, outperforming Claude Sonnet 4.5 (79.65%) and GPT 5 (78.98%).

- Across all 8,136 evaluations, 81.77% of responses showed no issues, 13.11% had minor issues, and 5.11% had major issues.

Error Scores (weighted):

- Gemini 2.5 Pro: 0.1641 → 91.8% error-free rate

- GPT 5: 0.2485 → 87.57% error-free rate

- Claude Sonnet 4.5: 0.2876 → 85.62% error-free rate

Category-Wise Model Comparison

.png)

Model Weaknesses

- Gemini 2.5 Pro: Struggles with verbosity and clarity (35.99%), and occasionally with final answer formatting (14.45%).

- Claude Sonnet 4.5: Faces challenges in final answer accuracy (26.55%) and conceptual soundness (23.89%).

- GPT 5: Exhibits significant verbosity issues (80.53%), affecting readability despite strong reasoning ability.

Conclusion: Choosing the Right Model for the Right Task

Each model brings unique strengths and specific weaknesses to mathematical problem-solving:

- For general mathematical tasks:

Gemini 2.5 Pro offers the most balanced and dependable performance across all dimensions. - For accuracy-first use cases:

GPT 5 excels in computational precision and conceptual soundness ideal when clarity can be handled separately. - For education and explanation-heavy contexts:

Claude Sonnet 4.5 stands out for its verbosity and narrative clarity, making it useful in teaching or explanatory scenarios despite mathematical inconsistencies.

Across domains Algebra, Calculus, Geometry, and Statistics Gemini 2.5 Pro consistently leads, minimizing the need for domain-specific tuning.

Yet, none of the models are flawless. While Gemini 2.5 Pro demonstrates overall reliability, Claude Sonnet 4.5’s conceptual errors and GPT 5’s verbosity underline a crucial point: mathematical intelligence in AI still requires a balance between correctness, clarity, and coherence.

As the field advances, hybrid approaches that combine the strengths of multiple models may well become the standard, merging the precision of GPT, the clarity of Claude, and the consistency of Gemini.

Mathematical reasoning is more than computation; it's structured logic, clarity, and explanation. Through this analysis, we’re one step closer to understanding how AI can not only solve problems but think and teach more like humans.

Reach out to us at hey@deccan.ai for more information, work samples, etc.

Explore other blogs

View all