WebApp Study: Evaluating AI-Native Web Application Builders

June 24, 2025

Abhishek, Advaith, Ashwin, Divyansh, Janya, Manideep, Nishanth, Priya, Safoora, Saksham, Saloni, Suyash

.png)

Overview

AI-native web app builders are now essential for quickly creating websites and apps, making it faster and easier for anyone even without technical skills to build and launch them. Despite their growing popularity, a significant gap exists between these tools' marketing claims and their real-world performance. Limited research1 offers neither thorough evaluations nor reliable data to guide users in selecting the appropriate tools. Additionally, no public, vendor-neutral scorecards exist to objectively compare these tools. This absence of thorough, independent research underscores the need for a comprehensive evaluation of AI-native web application builders to better understand their limitations, and to drive necessary improvements.

The WebApp Study Verified addresses this gap by establishing a structured framework to rigorously evaluate five leading AI-native web application builders - Lovable, Bolt, v0, Firebase GenAI Studio, and Replit. These platforms are assessed across seven production critical criteria: functionality, visual appeal, error handling, responsiveness, content quality, navigation, and data consistency. By incorporating expert human annotation, this approach ensures a thorough evaluation of both technical accuracy and user experience.

This research gives product designers and front-end engineers solid data to help them choose the right tools. It also highlights critical areas for improvement - notably in architectural understanding and error handling for agent developers, grounding evaluation in transparent evidence instead of marketing claims.

.png)

Leaderboard

.png)

Why is the WebApp Study Verified Needed?

Key industry insights2 and external evidence underscore the urgent need for the WebApp Study Verified, which evaluates AI-native web app builders. The goal is to provide persuasive and critical bullet points that resonate with frontend engineers, product designers, and independent builders:

- Lack of Independent Benchmarks: No public, vendor-neutral scorecards currently exist. The WebApp Study fills this gap through rubric-based, real-world testing.

- Marketing vs Reality: Claims of 'one-prompt production apps' often fail when handling complex tasks. The study exposes these performance limits.

- Deployment Risks: AI-generated code can introduce critical bugs, as evidenced by real-world weekly outages caused by LLM-coded deployments.

- Non-Technical Barriers: ‘No-code' tools frequently require developer intervention for advanced use cases. Lack of clear capability visibility can lead to team misalignment, inefficiencies, or improper tool usage.

By addressing these gaps, WebApp Study Verified aims to provide a verified, multi-dimensional lens to better understand and improve the next generation of AI coding assistants, ensuring they are more reliable, and aligned with real-world developer needs.

Introducing the Human Evaluated Dataset and Study Methodology

Our study is built upon the Human Evaluated Dataset, a collection of 53 curated tasks derived directly from real-world development scenarios.

Taxonomy Defining Dataset Characteristics:

- Real-World Task Fidelity - No synthetic prompts; each entry reflects a deployable, commonly requested product feature or app specification.

- Domain Diversity - Covers eight major application domains, including transactional systems, content platforms, and operational tools. This range ensures that agents are tested against a broad spectrum of user needs and industry-relevant use cases.

- Scalable Complexity - Each use case is modeled at three functional levels (Easy, Medium, Hard), enabling gradient-based performance analysis across agent capabilities.

- Component Variance: Included modules:

- Authentication

- Routing/Navigation

- CRUD with real-time updates

- Role-based dashboards

- Search/Filter logic

- Accessibility and Mobile responsiveness

- These components are the basic building blocks of modern web apps. Including them helps us thoroughly test how well each builder can handle real development needs.

This taxonomy ensures that the dataset covers a broad spectrum of real-world applications, providing a robust foundation for testing.

.png)

Dataset Creation Methodology:

We created such a dataset using a meticulous process that involved multiple annotators:

- Sample Curation: We curated the dataset by cross-referencing real-world application development needs based on market demand and technical feasibility. Each task was selected for its relevance to current web application requirements, covering domains such as e-commerce, productivity tools, and customer engagement platforms.

- Prompt Creation: Expert developers and product designers crafted each task prompt to reflect realistic developer intent with clarity and conciseness. Prompts were designed to align with typical product requirement documents, ensuring precise specifications for the AI builder. Task complexity was calibrated across three tiers: Easy (static UI), Medium (dynamic workflow basic back-end), and Hard (complex workflows with simulated backend logic).

- Multi-Annotator Validation: A panel of frontend engineers, product designers, and independent builders rigorously validated the dataset. These experts reviewed both the prompts and the generated AI outputs to ensure they adhered to realistic design and functionality. Three annotators scored each task independently to enhance the dataset's reliability and eliminate any bias or error in judgment.

.png)

Annotator Persona

We carefully selected and trained annotators to ensure evaluation quality and impartiality, as described below.

.png)

How the Study Was Conducted?

Each WebApp builder was tasked with completing these assignments using concise, well-defined instructions aimed at evaluating both reasoning and architectural understanding. Outputs were deployed and evaluated in live environments using a seven-part rubric covering functionality, user experience, error handling, responsiveness, content quality, navigation, and data consistency.

All evaluations were performed blind and independently scored by human experts across disciplines-front end engineers with design principle-to ensure unbiased, multi-perspective performance analysis.

.png)

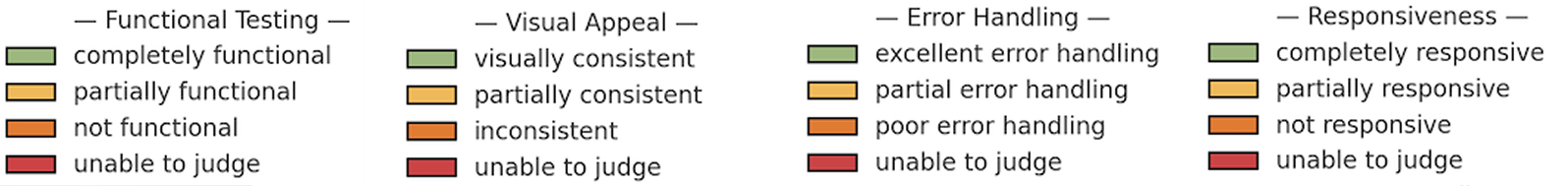

Our Comprehensive Evaluation Rubrics

To assess agents comprehensively, we developed seven key rubrics, reflecting both technical performance and practical usability. We applied these rubrics in our study, and our evaluation revealed insightful quantitative and qualitative findings.

Key Results from the WebApp Study Verified

Our evaluation revealed a diverse landscape of capabilities among the agents, highlighting specific strengths and common areas for improvement. No single agent proved universally superior across all tasks and metrics.

Complexity-wise insights:

.png)

Observational Insights:

- Performance Decline with Complexity: Several agents exhibit a noticeable decline in scores from Easy to Hard tasks, particularly in rubrics such as Functional Testing, Error Handling, and Responsiveness.

- Domain Consistency: Across all eight categories, agents showed overall similar performance patterns with no domain-specific spikes or drops.

- Lovable stands out for its reliability, functional consistency, and polished design, making it suitable for both simple and complex projects.

- Bolt performs well for basic tasks but struggles with advanced customization.

- v0 offers flexibility in functionality and error handling, it executes multi-step logic well although it needs design improvements.

- Firebase excels in full-stack applications but is hindered by occasional bugs and inconsistent design.

- Replit provides flexibility but suffers from frequent errors and inconsistent outputs, requiring significant refinement.

- Top-performing Tools:

- v0 maintains the highest functional testing and navigation scores across all complexities, indicating stronger multi-step logic and complex workflow handling.

- Lovable excels in Visual Appeal and Content Quality, showing robust design consistency even as complexity grows.

Visualizing the Results:

We visualized the results using stacked bar charts, bar charts by complexity, and radar plots to illustrate agent performance across various metrics. These visual aids highlight key strengths and weaknesses among the builders.

- Stacked Bar Charts: Showing the distribution of human rubric scores (e.g. completely functional, partially functional, non-functional etc) for each agent across dimensions like Functional testing, Visual Appeal, Responsiveness, Content quality, Error Handling, Navigation and Data Consistency. These charts illustrate each agent's success-to-failure ratio across specific criteria.

%20(1).png)

- Bar Charts: The bar chart maps agent performance across task complexities (Easy, Medium, Hard). It highlights capability drop-offs, resilience under load, and helps identify which agents are suited for simple prototypes versus complex applications.

- Spider Web (Radar) Plots: Lovable and Bolt consistently score the highest across most categories, while Replit lags behind, especially in Content Quality and Visual Appeal.

Systemic Limitations Across Agents:

Despite the above insights, our study highlights several systemic limitations observed across the agents.

- Limited Backend Capabilities: Except for Firebase and v0, most evaluated AI-native web builders rely primarily on front-end frameworks. This limits their ability to handle complex, server-side logic or multi-page routing without additional backend setup.

- Lack of Flexibility: Some agents, like Replit, are framework-agnostic, whereas others (e.g., Firebase, v0) are locked into specific ecosystems. This creates vendor lock-in and restricts the ability to freely integrate with third-party services or frameworks.

- Performance Degradation on Complex Tasks: Agents struggled with advanced use cases, including real-time updates, state management, and external data integration. The complexity of the task often led to degraded performance, especially when multi-step workflows were required.

Future Work

This study represents a significant step, but it has limitations. While the 53-task dataset provides a solid foundation, it should be expanded to include additional architectures and task types (e.g., large-scale refactoring, complex API integrations) for greater statistical confidence. Despite structuring and verifying our human evaluation, some subjectivity remains. We plan to refine the process by introducing more granular rubrics and formal inter-annotator agreement metrics (e.g., Cohen's Kappa). In future work, we will also incorporate cost-efficiency metrics (runtime, token usage) and expand our security analysis tools to support other languages.

Implications for Development

Our findings offer practical implications for both developers building these agents and software engineers using them:

- For Agent Developers:

- Improved Architecture Support: Developers must enhance tools to handle more complex app architectures, such as multi-page routing and server-side logic.

- Error Handling: Agents need better error messaging and handling to improve user clarity and prevent data exposure.

- Cross-Platform Responsiveness: Developers must ensure better support for responsive designs across various devices and screen sizes.

- Reduced Vendor Lock-in: Tools should be less dependent on proprietary ecosystems, allowing flexibility in integration and framework usage.

- For Product Designers:

- Tool Selection: Designers should align their choice of AI web app builder with project goalsfor instance, using Bolt for rapid prototyping or Lovable for design-centric projects.

- UX Adjustments: Given the limitations in design quality, designers must refine AI-generated outputs to ensure visual consistency and user-friendly interfaces.

- Collaboration with Developers: Designers should work closely with developers to address gaps in functionality and design, particularly when AI tools fall short in complex tasks.

Conclusion

The WebApp Study Verified highlights the varied capabilities of AI-native web application builders, emphasizing Lovable's design consistency, Firebase GenAI Studio's full-stack support, and v0's functional execution. However, no single tool demonstrates comprehensive reliability across all evaluated dimensions. Quantitative and observational insights reveal consistent weaknesses in error handling, data consistency, and cross-device responsiveness, especially under complex task conditions.

Replit underperforms across most metrics, whereas Lovable and Bolt excel visually but lack backend robustness. These builders should be regarded as assistive tools rather than replacements for developers. Their effectiveness depends on matching the tool to the task, selective adoption, and continuous human oversight. Their outputs often require refinement to meet real-world standards of functionality, usability, and design integrity.

As detailed in the “Limitations and Future Work” sections, tool-specific constraints and task scope limit current capabilities. Consider these builders as assistive systems-not developer replacements-whose effectiveness depends on precise task matching, selective adoption, and ongoing human oversight.

Appendix

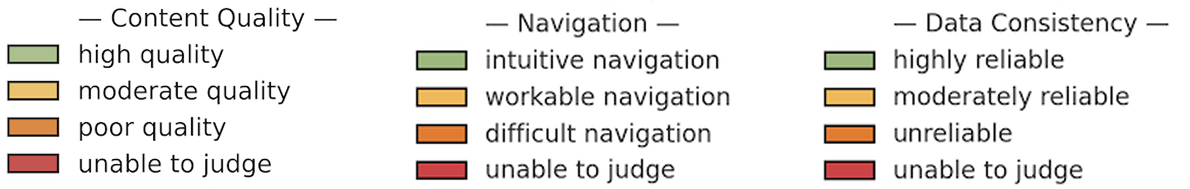

Rational on using Agent Achievement Scoring (Mean-Ratio %)

References

- WebApp1k:Benchmark for Web App Development - https://arxiv.org/abs/2408.00019

- Key Industry Insights - https://www.mckinsey.com/capabilities/quantumblack/our-insights/the-state-of-ai?utm_source=chatgpt.com

- Utlization - https://wearesocial.com/wp-content/uploads/2023/03/Digital-2023-Global-Overview-Report.pdf

- AI WebApp Builder Comparison – Models, and Versions (2025)

.png)

Reach out to us at hey@deccan.ai for more information, work samples, etc.

Explore other Research

View all

.png)

.png)