New

New

Train and evaluate your agents in enterprise-grade RL environments

Stress-test AI agents inside domain-calibrated RL environments that mirror real enterprise workflows, system states, and failure modes.

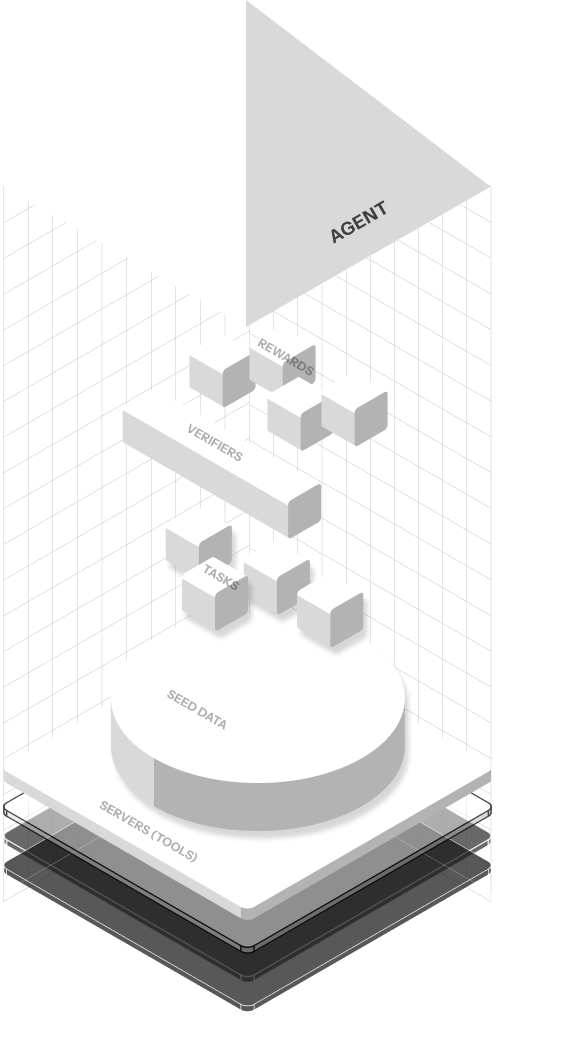

The STARK RL gym

A playground designed to break AI agents without production risk

Simulated enterprise servers

The RL environment

Train and evaluate agents

.png)

.png)

.png)

Use Cases

Whether it’s multi-app operation, code execution, or research, the STARK RL gym reflects the complexity enterprises actually want from an agent training playground.

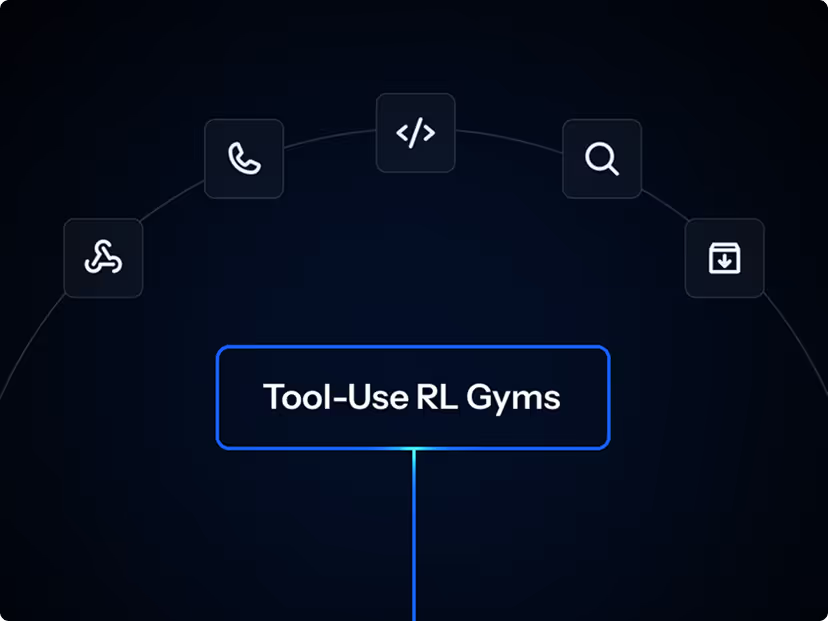

Tool-use RL gyms (mirrored enterprise servers)

Browser-use RL gyms (Web/DOM)

Computer-use RL gyms (OS/GUI)

Coding/Software Engineering RL gyms

.avif)

.avif)

.avif)

The quality bar that shapes the STARK RL gym

High-Trust, Not “Trust Us” Data

Every trajectory is reviewed for correctness, grounding, and realism — and verified using both human audits and automated checks.

Quality by Design, Not Post-Hoc Inspection

Prompts, scenarios, and environments all have thresholds. Bad logic never makes it to training.

Scale Only When Ready

We only scale past pilot when annotation, replayability, and verifier precision are locked in.

Enterprise-Grade Stability

All environments are tested for determinism, state drift, and load behavior, so your training signals stay stable.

Safety & Alignment Built In

We include failure cases and corrections to help models learn what not to do, not just what success looks like.

High-Trust, Not “Trust Us” Data

Every trajectory is reviewed for correctness, grounding, and realism — and verified using both human audits and automated checks.

Quality by Design, Not Post-Hoc Inspection

Prompts, scenarios, and environments all have thresholds. Bad logic never makes it to training.

Scale Only When Ready

We only scale past pilot when annotation, replayability, and verifier precision are locked in.

Enterprise-Grade Stability

All environments are tested for determinism, state drift, and load behavior, so your training signals stay stable.

Safety & Alignment Built In

We include failure cases and corrections to help models learn what not to do, not just what success looks like.

Custom environments

We create custom RL gym setups that model client-specific systems across:

Web

APIs

OS

GUI

Code Environements

Each setup generates replayable golden trajectories and human-reviewed execution traces, with built-in controls and escalation paths aligned to governance requirements, while maintaining research-grade rigor.

FAQ(s)

How realistic are your environments?

How are the scenarios created for the environments?

Can I inspect all data?

Do you support custom environments?

Train agents in RL environments where failure is injected by design

Get access to env

.svg)

.png)